4 April 2023

by Coupa

Go figure TQM - Meaningful Quality Metrics for Localization Clients


Please note that this article was written after I participated in the GALA Academy sessions on “How to (Re)Design Translation Quality Management for 2023 & Beyond” in January and February 2023, so my conclusions and current knowledge draw significantly on the invaluable insights shared by the leading translation quality experts who led those sessions.

No doubt, an almost endless range of statistical figures is available to capture and reflect the quality of a given translation from different viewpoints and at different levels of granularity. LSPs should commit to widely accepted quality standards, and most of them (approx. 75% in 2020, according to Slator) hold at least one quality management-related certification. As many quality management experts rightly point out, quality standards, and the LSPs’ commitment to them, should not be the providers’ responsibility alone: clients need to be aware of quality standards and requirements to a certain extent as well. However, clients cannot be expected to be experts in every standard that may be relevant to their work. The standards contain too much information (some may require reading 200 pages or more) and too many details that are often irrelevant to the client, and, last but not least, they are far too technical, as formal frameworks tend to be. In some cases, as with ISO 9001, sector-specific applications are also available on top of the generic standard.

Deciding which LSP is the right partner for your localization needs is a complex process. And while certification should not be the only decisive factor, it does tell you whether your potential partner is familiar with, and has actively implemented, best practices and common standards. In other words, it reflects a vendor’s commitment to the basic principles of quality management.

Standards and frameworks relevant to the localization industry

Major quality standards:

ISO 9001 (passed the “warrant of fitness” check in June 2021, as attested by the responsible ISO subcommittee, SC 2 “Quality systems”)

ISO/DIS 5060 (under development: this document provides guidance for the evaluation of human translation output, post-edited machine translation output, and unedited machine translation output. Its focus is on an analytic translation evaluation method that uses error types and penalty points, configured to produce an error score and a quality rating.)

Revision of ASTM F2575-14, Standard Guide for Quality Assurance in Translation

ISO 18587 (Machine Translation Post-Editing)

Translation quality metrics

SAE J2450 Translation Quality Metric

At this point, I need to go back to the question of how quality is defined. Since it is a core topic for the localization industry and a fundamental one for this article, I would like to spend a few sentences establishing the basic concept and its relevance for localization.

How would you define quality in translations?

ISO standard 9000 defines quality as the “degree to which a set of inherent characteristics [...] of an object [...] fulfills requirements [...]”.

I added a definition from ChatGPT for fun:

Quality in translations can be defined as the degree to which a translation accurately and effectively conveys the meaning and intent of the source text in the target language, while also meeting the specific requirements and expectations of the intended audience.

There is a consensus among translation quality experts and aficionados that quality in translations cannot be perceived solely as the absence of linguistic errors from a transcendent perspective; it must also be seen against the backdrop of factors such as requirements, specifications, customer (stakeholder) needs, and cost-benefit considerations. In a nutshell, depending on the requirements or expectations placed on the translation, it can still be considered a quality translation even if it is not free of linguistic errors.

Our quest for quality metrics

Having established what quality actually means, both in general and specifically for the localization industry, I would like to discuss one of the biggest challenges my team faces beyond our “everyday tasks”.

As a team within a large organization, we have to consider more than just our day-to-day work: How can we present our achievements, how can we quantify and qualify our success, and how can we justify our costs? It is often difficult to convert localization investments into actual return figures that any company, not just a profit-oriented one, can relate to; this is a recurring topic and a well-known challenge. Whenever we want to present our team’s achievements, we can show how many translations we have delivered, for example, for the Sales teams, but we rarely get to see the direct outcome of any given initiative. Did a deal close as a win after, or because, we helped translate an RFP/RFQ? And how can our contribution be converted into a return on investment (ROI) figure?

As we all know, statistics, figures, ratios, metrics, indicators, etc. tell it all. We hunger for them. Since it’s impossible to identify the part a translation plays in a deal (if this is acknowledged in the first place), we usually gather other indicators to show our performance and achievements (savings being just one of them). And it goes without saying that different clients and organizations have different expectations of their KPIs.

Challenges of a Language Quality Manager

In my role as Language Quality Manager, I am in charge of quality management and assurance, which include running quality checks, managing reviews, gathering feedback (reviewer reports, customer and internal tickets), evaluating and qualifying customer feedback before introducing a change (as customers are not linguists and often do not see the whole picture), assigning corrective and preventive actions to the translators, making sure that all files and TMs were updated correctly, amending the terminology… and keeping track of it all. Yes, it is a pretty long list.

Recently, I started looking into quality-related metrics that would help quantify quality assurance and evaluation activities, and that could also convince teams unfamiliar with localization processes (not least Finance) of the need to invest in various programs and/or tools.

What we are currently tracking

In this quest for data, I am currently tracking the number of linguistic fixes requested through tickets, which can be reduced by assessing and improving translation quality proactively, e.g., by running in-context reviews of the most used screens or functions. This also reduces the time people on different teams need to spend creating a ticket in JIRA, providing screenshots in the source and target languages, and specifying the current translation and what it needs to be replaced with (the target or desired result). As anyone who has worked for a software firm knows well, just filling in the mandatory fields of a JIRA ticket takes significant time and resources, so the time saved, and correspondingly the costs saved, could be another meaningful metric to include in a presentation/QBR. These results should not just be presented as absolute numbers but, if possible, also in relation to, for example, previous quarterly or annual results, so that it’s easy to observe the evolution (hopefully for the better). And if the number of proactive fixes has increased while the number of customer complaints/tickets has gone down, you will have a compelling argument in favor of continuing to introduce quality improvement initiatives.
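Putting the tracked figures in relation to the previous period, and translating avoided tickets into time and cost, takes only a little arithmetic. The following is a minimal Python sketch under stated assumptions: the `QuarterStats` fields, the quarter figures, the 20 minutes per ticket, and the hourly rate are all hypothetical placeholders, not data from our team, and would need to be replaced with your own estimates.

```python
from dataclasses import dataclass


@dataclass
class QuarterStats:
    """Hypothetical per-quarter counts; field names are illustrative only."""
    label: str
    ticket_fixes: int       # linguistic fixes requested via JIRA tickets
    proactive_fixes: int    # fixes found in proactive (e.g., in-context) reviews
    customer_tickets: int   # customer complaints/tickets about language issues


def pct_change(previous: float, current: float) -> float:
    """Relative change from the previous to the current period, in percent."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100


def estimated_savings(tickets_avoided: int, minutes_per_ticket: float,
                      hourly_rate: float) -> tuple[float, float]:
    """Hours and cost saved by tickets that no longer have to be filed.

    minutes_per_ticket and hourly_rate are assumptions to be estimated
    for your own organization.
    """
    hours = tickets_avoided * minutes_per_ticket / 60
    return hours, hours * hourly_rate


prev = QuarterStats("Q3", ticket_fixes=120, proactive_fixes=40, customer_tickets=30)
curr = QuarterStats("Q4", ticket_fixes=90, proactive_fixes=75, customer_tickets=18)

# Report each metric relative to the previous quarter, not as an absolute number.
for field in ("ticket_fixes", "proactive_fixes", "customer_tickets"):
    before, after = getattr(prev, field), getattr(curr, field)
    print(f"{field}: {before} -> {after} ({pct_change(before, after):+.1f}%)")

hours, cost = estimated_savings(prev.ticket_fixes - curr.ticket_fixes,
                                minutes_per_ticket=20, hourly_rate=60.0)
print(f"Estimated time saved: {hours:.1f} h, cost saved: {cost:.2f}")
```

The percentage change is what makes the evolution visible in a QBR; the savings estimate is only as good as the per-ticket time assumption behind it.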

Technological limitations

Then there is the question of which metrics I can actually calculate or capture on my end: what metrics can our TMS provide? Provided that the project workflows have been configured accordingly and the necessary additional apps have been installed, we can run LQAs and either define the quality dimensions we consider relevant ourselves or use one of the common industry models below:

● TAUS DQF-MQM

● LISA QA Model

● SAE J2450
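To make the error-type and penalty-point approach (as described above for ISO/DIS 5060) more concrete, here is a minimal Python sketch of how such an LQA score and a Pass/Fail result could be computed. It is a generic illustration, not the scoring formula of TAUS DQF-MQM, the LISA QA Model, or SAE J2450: the severity weights, the categories, the normalization by word count, the 99.0 pass threshold, and the `lqa_result` helper are all assumptions chosen for the example.

```python
from collections import Counter

# Hypothetical severity weights for illustration only; real models define
# their own categories, weights, and pass thresholds.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 10}


def lqa_result(errors: list[tuple[str, str]], word_count: int,
               pass_threshold: float = 99.0) -> tuple[float, dict[str, int], bool]:
    """Score an LQA sample using error types and penalty points.

    errors: (category, severity) tuples logged by the reviewer.
    Returns (score out of 100, penalty points per category, passed).
    """
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalties: Counter[str] = Counter()
    for category, severity in errors:
        penalties[category] += SEVERITY_WEIGHTS[severity]
    total = sum(penalties.values())
    # Normalize by sample size so samples of different lengths are comparable.
    score = round(max(0.0, 100 * (1 - total / word_count)), 2)
    return score, dict(penalties), score >= pass_threshold


sample_errors = [
    ("accuracy", "major"),
    ("terminology", "minor"),
    ("terminology", "minor"),
    ("style", "minor"),
]
score, by_category, passed = lqa_result(sample_errors, word_count=1000)
print(score, by_category, "PASS" if passed else "FAIL")
```

The per-category breakdown is mainly useful inside the Localization team, while the single Pass/Fail flag is the kind of result that is easier to communicate to other teams.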

However, I find that these scores have limited informational value (at best) for people outside the Localization team, and I am not even sure how relevant they are to other members of the Localization team. And even if I wanted to include easily comprehensible Pass/Fail results for the different languages, the question remains: “Is it worth spending money on improving a locale that is not one of the company’s major markets/targets?”

Possible solutions are discussed in the “Scalability” section below. What can and should be said, however, is this (and all quality managers need to come to terms with it, especially those of us who consider ourselves perfectionists, which in fact we all are): in less than ideal circumstances, we need to be realistic and practical and accept the given limitations. We have neither the time, the money, nor the resources to do it all, so we need to focus on the top-priority requirements instead.

Figures to back up localization efforts

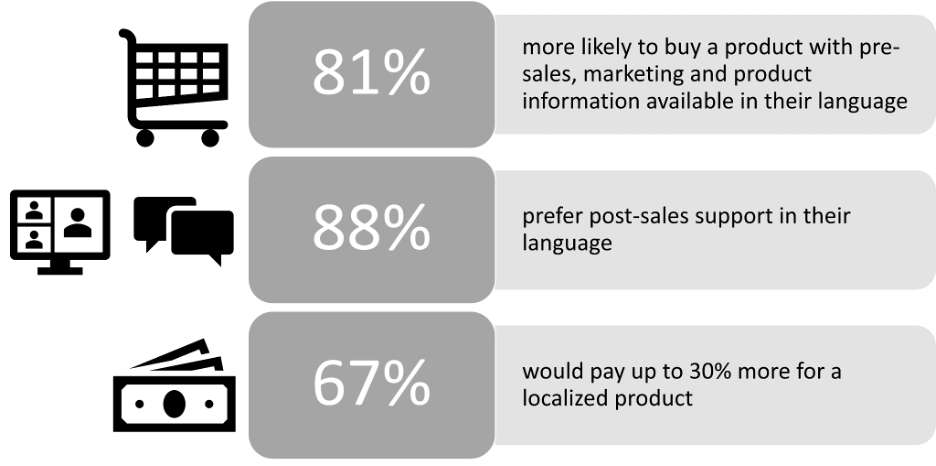

The quest for quality metrics is not entirely disconnected from the subject of general translation KPIs which has been explored by many experts already. Why a training video or website should be offered in local(ized) versions, can be justified by meeting actual needs, official or even internal surveys asking if a product would be more accepted in one’s own language, so it may be possible to obtain actual figures. I’m sure you are familiar with the following results of the “Can't Read, Won't Buy Research Series” published by the CSA (Common Sense Advisory) in 2020:

If, on the other hand, the translations are linguistically defective, this can affect the user experience and adoption of the localized software, or even undermine the customer’s trust in the provider’s product and professionalism.

There are varying degrees of quality issues as well: If a software user sees an occasional terminological inconsistency or a spelling error here and there, they most probably will not stop using the product. But, if the user has problems finding their way around the UI, is unable to understand the information or instructions provided, and ends up effectively “blocked” due to a poor localization, they may even reconsider their buying decision, or, at the very least, give hell to your support and account contacts, which could turn into a silent productivity and money black hole.

Metrics worth considering

One way to turn the arguments for quality assurance and quality management into actual metrics could be to run a survey among customers and stakeholders to capture their satisfaction, where they see deficits, what their key acceptance criteria are, etc. How many questions should be included in that survey, how much time the respondents can and would spend on taking it, and above all, how much time our team can spend on analyzing the results: these are questions that need to be explored further. It would make sense to measure customer satisfaction over time and to compare the outcome before and after significant quality improvement efforts.

However, a distinction needs to be made between bugs caused by localization and bugs caused by programming. Users find this hard to tell apart, and the resulting confusion can (hopefully) be avoided by asking the right questions. Still, a downside of surveys is that they may create a lot of administrative overhead, or even noise, without representing the real sentiment among users or stakeholders.

Scalability

So much for the metrics I would like to capture; now (corporate) reality kicks in. What is actually achievable and scalable? The following compromises on quality can be made:

➢ If x languages have failed the LQA and the budget for improvement efforts and fixes is limited, one could focus on the major languages (tier 1 and tier 2) first, since these markets generate the highest revenues. This

a) creates more value from our ongoing investment

b) limits the risk of higher losses, since an entire region that remains dissatisfied over a longer period of time may not renew many contracts or, in the worst case, may cancel them

➢ Instead of reviewing and improving the entire product, it would be advisable to focus on the parts with the highest visibility (a form of sampling)

➢ When performing an internal LQA, categorizing the errors found by the reviewers takes additional time. So, to keep the time spent and the associated costs low, and also so as not to exasperate the reviewers (this assumes the reviewers are professional translators; SMEs may be neither suited to error categorization nor have the extra time for it), I decided to use my own customized quality model with fewer error dimensions, which should still provide sufficient results.

➢ When large volumes need to be translated within a short timeline, machine translation can be a good alternative to human translation. Sometimes having something is better than having nothing, and for some types of localization, MT is a feasible solution. Quality layers can still be added depending on time and cost restrictions: for instance, one could opt for MTPE for the most used or most visible elements, or even have those parts reviewed by humans to prevent blatant failures.
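The prioritization rule in the first point of the list above (fix failed tier 1 and tier 2 languages first when the budget is limited) can be sketched as a simple greedy selection. This is a minimal Python sketch under stated assumptions: the `Locale` fields, the tier numbers, the revenue and fix-cost figures, and the `plan_fixes` helper are all hypothetical, and a real decision would weigh more factors than revenue alone.

```python
from dataclasses import dataclass


@dataclass
class Locale:
    """Hypothetical record for a locale that failed the LQA."""
    code: str
    tier: int               # 1 = top market, 2 = next tier, 3+ = smaller markets
    annual_revenue: float   # revenue attributed to the market (illustrative)
    fix_cost: float         # estimated cost to bring the locale up to a pass


def plan_fixes(failed: list[Locale], budget: float,
               max_tier: int = 2) -> tuple[list[str], float]:
    """Fund fixes for tier 1/2 locales first, highest revenue first, within budget."""
    candidates = sorted(
        (loc for loc in failed if loc.tier <= max_tier),
        key=lambda loc: (loc.tier, -loc.annual_revenue),
    )
    plan: list[str] = []
    remaining = budget
    for loc in candidates:
        # Skip a locale whose fix does not fit, but keep checking the others.
        if loc.fix_cost <= remaining:
            plan.append(loc.code)
            remaining -= loc.fix_cost
    return plan, remaining


failed_locales = [
    Locale("de-DE", tier=1, annual_revenue=5_000_000, fix_cost=20_000),
    Locale("ja-JP", tier=1, annual_revenue=4_000_000, fix_cost=25_000),
    Locale("pt-BR", tier=2, annual_revenue=1_500_000, fix_cost=15_000),
    Locale("pl-PL", tier=3, annual_revenue=300_000, fix_cost=8_000),
]
plan, left = plan_fixes(failed_locales, budget=50_000.0)
print(plan, left)  # ['de-DE', 'ja-JP'] 5000.0
```

A greedy pass was chosen because it mirrors the rule of funding the major languages first; it does not search for the combination of fixes with the highest total value, which would be a knapsack-style optimization.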

Conclusion

While I am capturing and using a few quality metrics, more should and will be added. One of the takeaways from past QBRs/presentations, from the GALA Academy sessions on quality management in January/February 2023, and from my experience as Quality Manager in our Localization team is not to present absolute figures alone, but always to put them in relation to comparable data, e.g., to show developments and trends. This probably applies universally to all localization buyers across different industries.

Of course, a metric that is irrelevant for some companies and Localization teams may be a key data point for another. This always depends on the company, the industry, and the teams targeted. As mentioned above, tracking errors, and especially error categorization, in our translations is highly relevant to me and to the translators alike, but less so to my colleagues from Finance.

Keep it real

Sometimes we cannot afford to strive for perfection and need to focus on delivering results instead. That’s a lesson I learned in my role as quality manager: in my industry, the risk of delivering a marketing brochure or documentation of lower linguistic quality is low compared to the pharmaceutical or healthcare industry, where lives can be at stake.

Keep it meaningful

What applies to most real-life situations applies to localization as well: it’s advisable to keep the number of metrics and KPIs low, since capturing, tracking, updating, and arranging them takes considerable time and effort, and presenting too many figures can tire the target audience. It’s better to track selected data, “help yourself” from it, and vary your choice depending on the purpose and goal at hand.

If all requirements and specifications are met, the quality manager has done a good job, although we should, of course, never tire of finding ways to improve current processes and the linguistic quality of deficient languages. It’s not just translators and language partners: clients, too, can and should influence the quality of localization to achieve the common goal of making everyone involved successful.


Nadine Lai

Languages have always played a big role in my life, since I come from a mixed family in which up to 8 languages are spoken (not even counting the different Chinese dialects spoken in my family). I embraced the opportunity to become a language quality manager in Coupa's Localization team in 2021; it is a highly dynamic and often challenging role, and it certainly never gets dull. I live in Cologne (Germany) with my husband and daughter, and am still waiting for an offer to oversee the quality management of our city's beautiful Cathedral's maintenance, which I might even decline ;-)